The EEAS has uncovered a coordinated anti-Western campaign on Facebook that uses sophisticated techniques to hide its creators’ tracks.

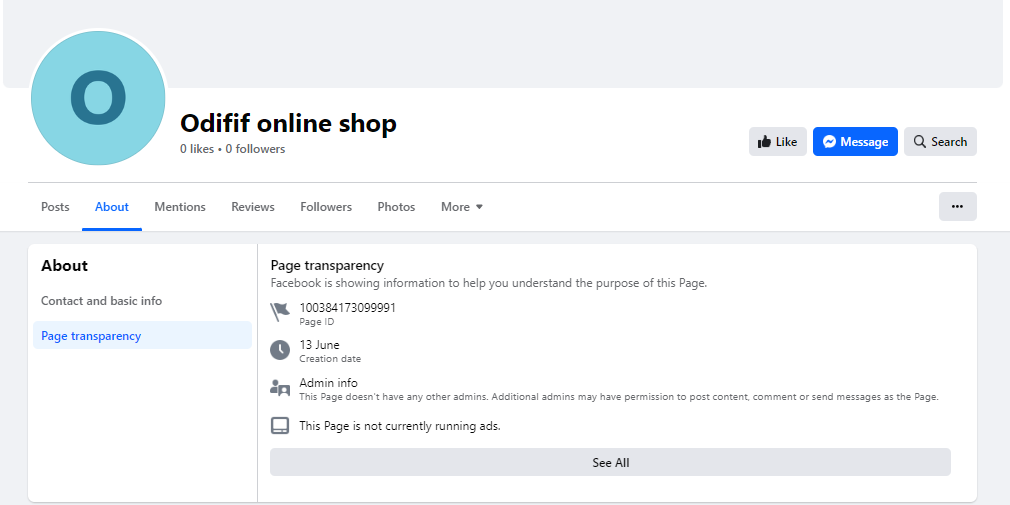

Advertising is a big driver of the digital economy. But not all that glitters is gold. Recently, we detected over 1,000 sponsored Facebook posts, 188 of them unique, promoting anti-Western and anti-Ukrainian content. The ads came from Facebook pages masquerading as online shops and categorised as ‘Musician/Band’ pages.

The manipulation campaign we unravelled was – or rather is, as some of the pages are still online – international in nature. Out of the 658 ads we could access at the time of discovery, 318 were in German, 321 in French, 14 in Ukrainian, and 5 in Hebrew.

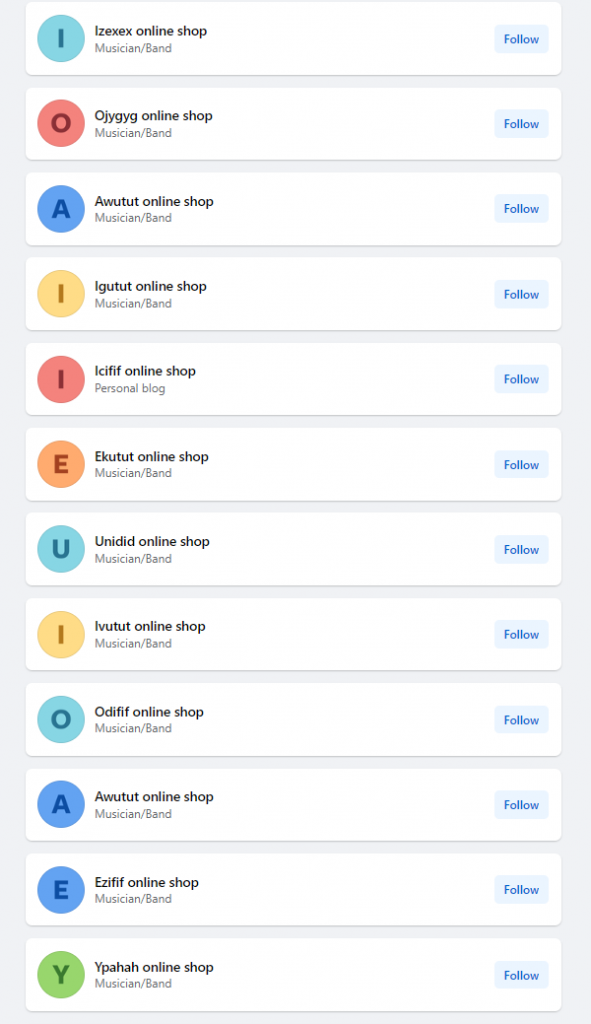

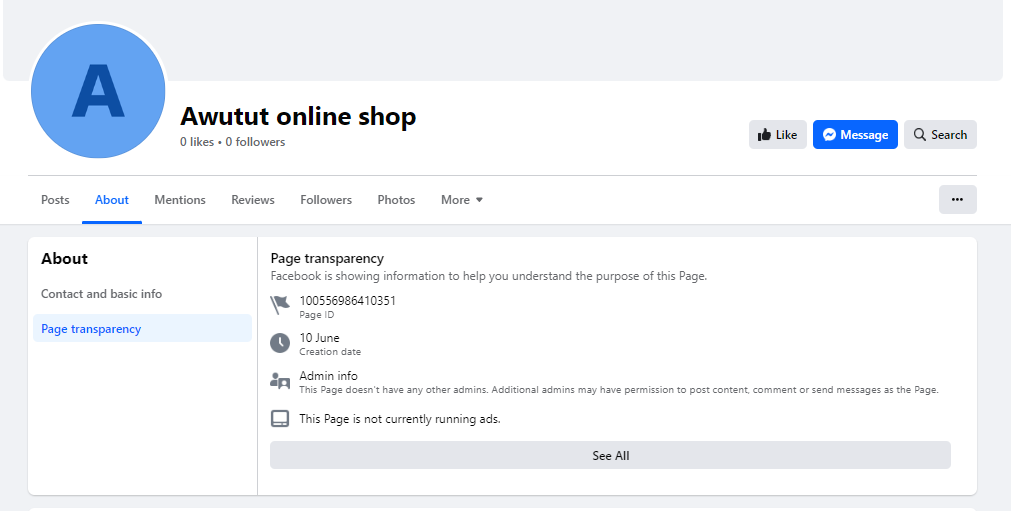

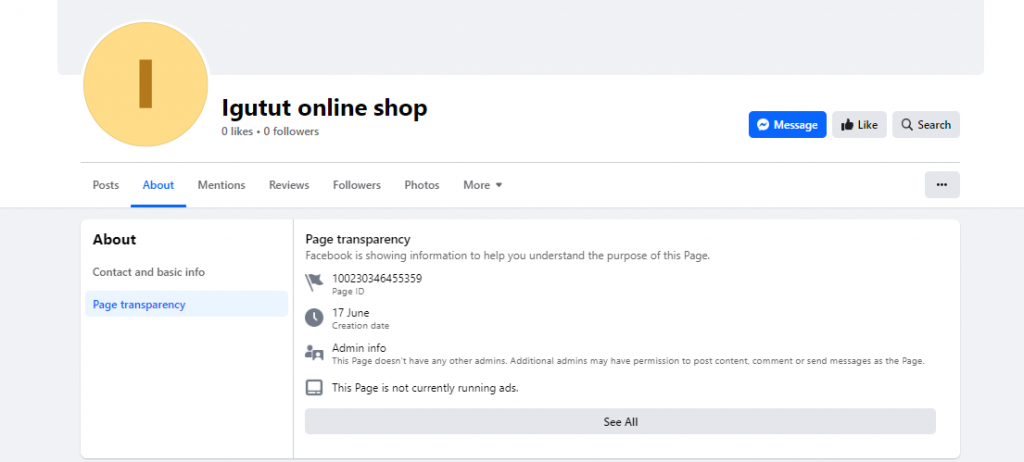

The actors behind the campaign went to considerable lengths to hide their tracks. Of the roughly 1,000 ads, about one in four contained a web address, and these addresses belonged to 105 distinct domains. The Facebook pages promoting the ads were all created between June and October of this year. Apart from running the ads, these pages show no other activity and have neither followers nor a profile picture. Their usernames follow a specific pattern: six letters arranged as vowel-consonant-vowel-consonant-vowel-consonant (e.g. Axamam, Umowow, Ipejej).

Screenshot showing some of the other ‘dormant accounts’ whose usernames were built using the same structure.

Screenshots showing that the accounts were created in the same timeframe and with similar info.

Screenshots showing accounts from the same network running the same sponsored ads during the same timeframe, with identical text and images.
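The username convention described above is regular enough to screen for automatically. Below is a minimal Python sketch, assuming the vowels are a, e, i, o and u (so y counts as a consonant). `matches_naming_pattern` is a hypothetical helper for illustration, not a tool used in this investigation.

```python
import re

# Assumption: vowels are a, e, i, o, u; y is treated as a consonant.
VOWELS = "aeiou"
CONSONANTS = "bcdfghjklmnpqrstvwxyz"

# Exactly three vowel-consonant pairs, i.e. V-C-V-C-V-C (six letters).
PATTERN = re.compile(rf"(?:[{VOWELS}][{CONSONANTS}]){{3}}", re.IGNORECASE)


def matches_naming_pattern(username: str) -> bool:
    """Return True if the username is six letters in vowel-consonant order."""
    return PATTERN.fullmatch(username) is not None


if __name__ == "__main__":
    # The first three names are the examples cited above; the last two do not fit.
    for name in ["Axamam", "Umowow", "Ipejej", "Maxama", "Axamamx"]:
        print(name, matches_naming_pattern(name))
```

A match on its own proves little, since ordinary names such as "Oliver" also fit the pattern. It only becomes meaningful alongside the other signals above, such as shared creation dates and the absence of followers or a profile picture.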

Let us unpack the case.

Whodunit?

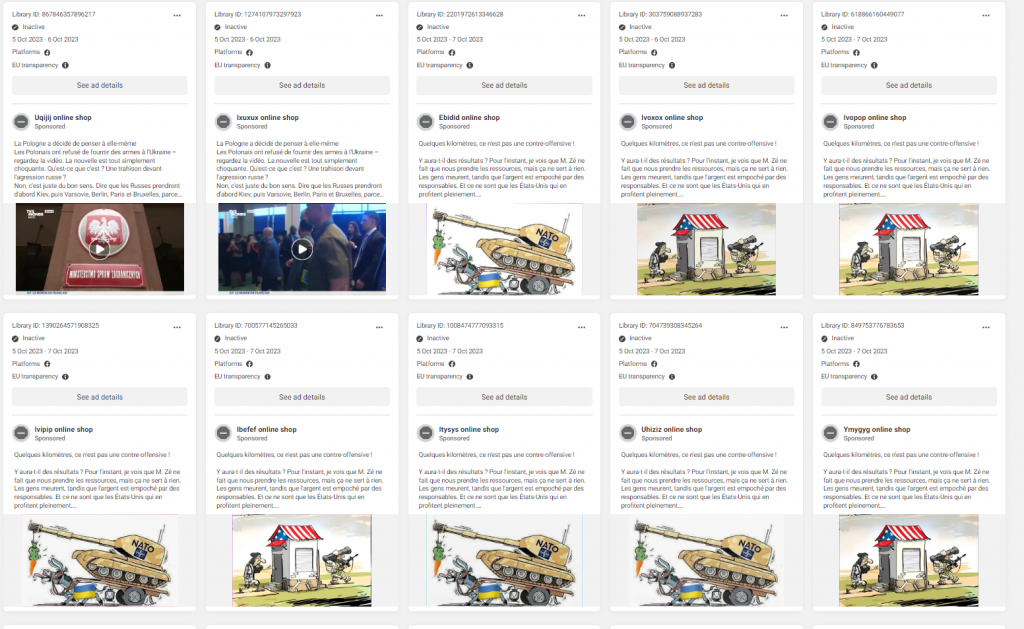

Content-wise, the sponsored posts in question align neatly with material often promoted by pro-Kremlin actors. Some examples include cartoons targeting the West, edited pictures showing fake graffiti, and videos reframed to target the reputation of EU officials. We also found visuals from fake magazine covers, fake video ads impersonating Western companies, fake videos impersonating Ukrainian outlets, and even fake postal stamps.

Screenshot of a now-removed sponsored post targeting HRVP Josep Borrell

That said, none of the 105 domains mentioned in the sponsored posts have a clear connection to known parts of the Russian foreign information manipulation and interference (FIMI) sphere. Instead, these domains appear to be associated with various businesses, online services, or adult content websites. A significant number are hosted on a server in Malaysia owned by a company called Shinjiru Technology Sdn Bhd. Examples include solonggoodbye.com, tastroid.com, and cytteek.com. The owners of these domains may not be knowingly spreading pro-Russian disinformation; their websites could instead have been hacked. Additionally, the domains’ SSL certificates, the digital certificates that authenticate a domain’s identity, were renewed just a few days before the domains started being used on Facebook. These domains are no longer active.

However, the Atlantic Council’s Digital Forensic Research Lab (DFRLab) recently identified at least three sponsored posts that promoted the domain thumbra.com. That domain, in turn, redirected users to a fake article on a website impersonating the Ukrainian Independent Information Agency. As of 13 November, this domain has become unresponsive and no longer redirects to the fake site.

DFRLab researchers suggest that ‘multiple aspects of the forgery point to it likely being of Russian origin’. This judgment is in line with our findings that Russia’s FIMI ecosystem often uses URL redirection in its information manipulation campaigns. The practice is fairly straightforward: perpetrators manipulate the source code of a webpage, or the settings of a domain, so that visitors are automatically redirected to another domain. This technique is very effective when malicious actors want to keep sharing links to domains that platforms have marked as inauthentic, or to Kremlin-controlled outlets sanctioned by the EU. Instead of posting a blocked URL, they post an alternative one that redirects users to it, thus circumventing platforms’ efforts to limit access to sanctioned websites.
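The URL redirection described above can be implemented in several ways. Below is a minimal Python sketch that checks a page’s HTML source for two common client-side forms: a `<meta http-equiv="refresh">` tag and a JavaScript `location` assignment. `find_redirect_target` and `MetaRefreshParser` are hypothetical names, the example.org URLs are placeholders, and this is an illustration rather than the method used in our investigation.

```python
import re
from html.parser import HTMLParser
from typing import Optional

# Extracts the URL from a meta-refresh "content" value such as "0; url=https://example.org/".
META_URL = re.compile(r"url\s*=\s*['\"]?([^'\";\s]+)", re.IGNORECASE)

# JavaScript redirects such as window.location = "...", location.href = "..."
# or location.replace("..."). Obfuscated scripts will not match.
JS_REDIRECT = re.compile(
    r"""location(?:\.href)?\s*=\s*['"]([^'"]+)['"]"""
    r"""|location\.replace\(\s*['"]([^'"]+)['"]"""
)


class MetaRefreshParser(HTMLParser):
    """Records the target of the first <meta http-equiv="refresh"> tag found."""

    def __init__(self) -> None:
        super().__init__()
        self.target: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        if tag != "meta" or self.target is not None:
            return
        # HTMLParser lowercases attribute names; attribute values may be None.
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("http-equiv", "").lower() == "refresh":
            match = META_URL.search(attributes.get("content", ""))
            if match:
                self.target = match.group(1)


def find_redirect_target(html: str) -> Optional[str]:
    """Return the URL a page would send visitors to on load, or None.

    Only client-side redirects are detected; a server-side HTTP 301/302
    redirect is not visible in the HTML and must be checked separately.
    """
    parser = MetaRefreshParser()
    parser.feed(html)
    if parser.target:
        return parser.target
    match = JS_REDIRECT.search(html)
    if match:
        return match.group(1) or match.group(2)
    return None


if __name__ == "__main__":
    page = '<html><head><meta http-equiv="refresh" content="0; url=https://example.org/article"></head></html>'
    print(find_redirect_target(page))
```

Applied hop by hop, a check like this can trace where a seemingly innocuous link finally leads. Redirects configured at server level would instead require inspecting the HTTP response and its `Location` header.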

A series of accounts likely engaging in coordinated inauthentic behaviour on X used this technique to redirect users to articles published on websites impersonating legitimate outlets. The domains used were pakgala.org and bigagenda.com. Both domains showed the same kind of SSL certificate changes as the domains promoted in the sponsored posts described above: their certificates changed on the same day they started redirecting to an impersonator domain.
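The certificate-timing signal described here and for the sponsored-post domains can be expressed as a simple check: did a domain’s SSL certificate become valid shortly before the domain was first seen in use? Below is a minimal Python sketch, assuming the certificate’s validity start date (the X.509 `notBefore` field) and the first-seen date have already been collected. The seven-day window, the helper names and the sample dates are illustrative assumptions, not thresholds or data from our investigation.

```python
from datetime import date
from typing import NamedTuple


class DomainObservation(NamedTuple):
    """Dates collected for one domain (all values in this sketch are hypothetical)."""
    domain: str
    cert_not_before: date    # start of the SSL certificate's validity period
    first_seen_in_use: date  # first day the domain appeared in an ad or began redirecting


def recently_recertified(obs: DomainObservation, max_days: int = 7) -> bool:
    """Return True if the certificate became valid on the day of first use
    or at most max_days before it (max_days is an assumed threshold)."""
    gap = (obs.first_seen_in_use - obs.cert_not_before).days
    return 0 <= gap <= max_days


if __name__ == "__main__":
    # Placeholder domains and invented dates, for illustration only.
    observations = [
        DomainObservation("example.com", date(2023, 9, 1), date(2023, 9, 4)),
        DomainObservation("example.net", date(2023, 9, 10), date(2023, 9, 10)),
        DomainObservation("example.org", date(2023, 1, 15), date(2023, 9, 4)),
    ]
    for obs in observations:
        print(obs.domain, recently_recertified(obs))
```

A fresh certificate is weak evidence for a single domain, since certificates are routinely renewed; the signal carries weight when many domains in the same campaign share it.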

Large audience targeted by ads

From what we can see, the campaign reached a large online audience. The reach of the sponsored posts varied greatly, from around 2,000 to almost 40,000 views per post across the EU. Based on these figures, the sponsored posts could together have totalled anywhere between 3 and 10 million views.

To be clear, Meta defines the reach of a sponsored post as the estimated number of accounts in the EU that saw the ad at least once. Because reach is counted per ad, totals across several ads may include repeat views from the same accounts.

The cost of the campaign is nearly impossible to assess, as Facebook has suspended some of the ads. In any case, Facebook offers only limited information about its ad prices.

What next?

In addition to raising awareness of the issue, we flagged our findings to Meta for validation. After an independent investigation, the platform confirmed that some of the ads were linked to a coordinated inauthentic behaviour network exposed in Meta’s most recent Adversarial Threat Report.

While Meta removed some posts, quite a few remain online. In fact, searches we performed through Facebook using the names of the pages mentioned above revealed another set of suspicious pages. These additional accounts, currently dormant, share the same naming convention, creation timeframe and categorisation as the other pages, and a similar lack of followers.

There is a good chance that these new pages are interconnected. We are already seeing one of them participating in a new manipulation campaign focussing on the Israel-Hamas war, spreading the false if familiar narrative of Ukrainian weapons being sold to Hamas.