Recently, Facebook published a report on more than 150 coordinated inauthentic operations it has identified and disrupted. We have already discussed the meaning of these 150 takedowns. The platform has clearly advanced its content moderation, but, as Facebook warns, many covert perpetrators of disinformation have also evolved their tactics.

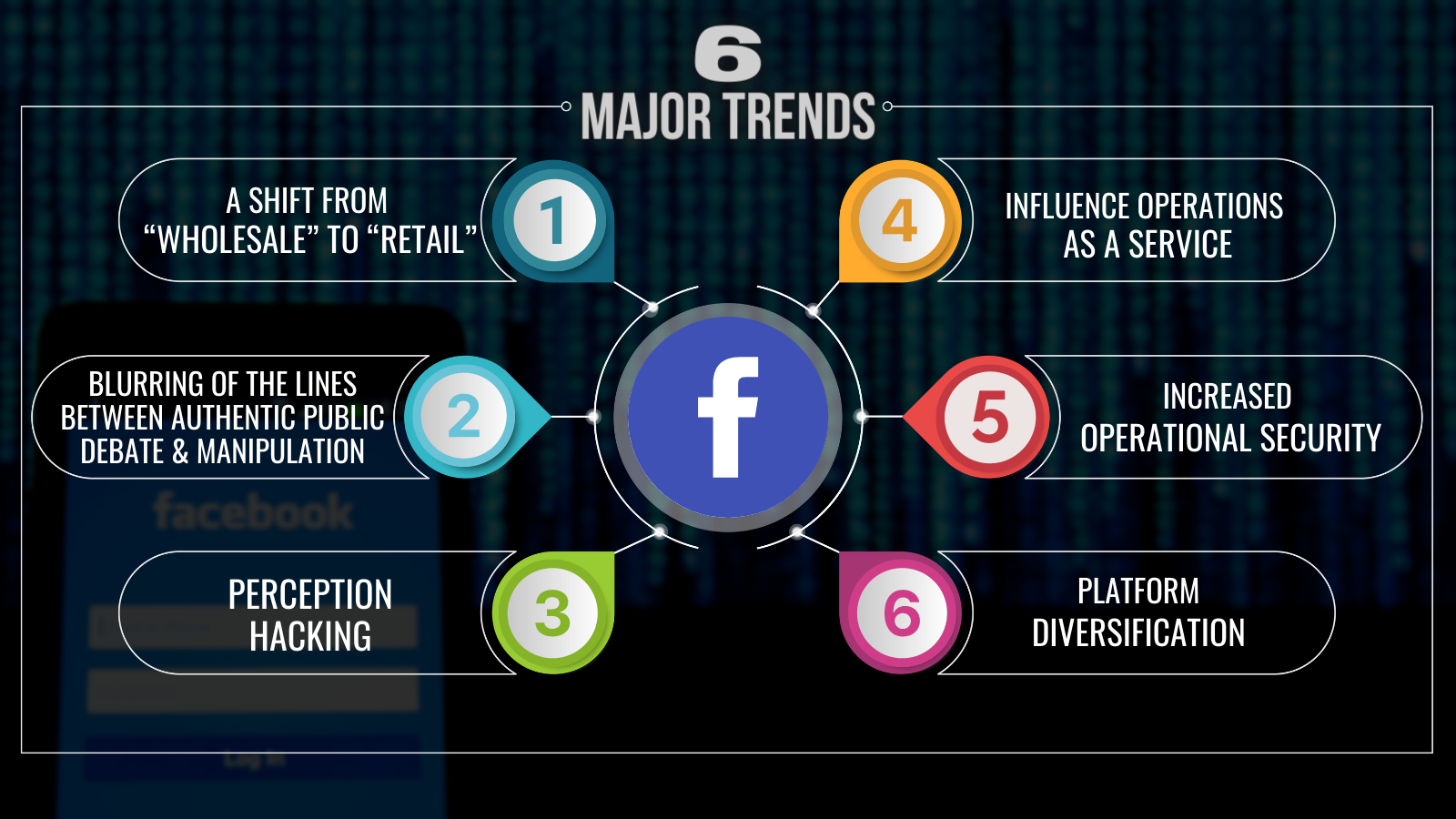

Despite Facebook’s progress, the report gives no reason for complacency, as threat actors are adapting to Facebook’s enforcement too. According to the platform, an increasingly complex game of whack-a-mole is evolving. Facebook’s team outlines six major trends:

The first trend is a shift from ‘wholesale’ to ‘retail’. Threat actors pivot from widespread, noisy deceptive campaigns to smaller, more targeted operations. An example is a case from early 2020, when Facebook found and removed a network run by Russian military intelligence. Focusing on Ukraine and neighbouring countries, and armed with fake personas that operated across blogging forums and multiple social media platforms, the network engaged policymakers, journalists and other public figures.

Retail operations are costly for spreaders of disinformation, and Facebook claims their return on investment is meagre. This might change with upcoming technological advances. For example, last week, Georgetown University released a report on automation and disinformation. The researchers analysed how GPT-3, an artificial intelligence (AI) system that writes text, will probably be used in disinformation campaigns. Hostile actors could use automation to launch highly scalable disinformation campaigns with less manpower than is currently required, the researchers warn:

‘This observation sheds light on a simple fact: while the U.S. discussion around Russian disinformation has centered on the popular image of automated bots, the operations themselves were fundamentally human, and the IRA [The Internet Research Agency, a company based in Russia and linked to the Russian government] was a bureaucratic mid-size organization like many others.’

The second trend Facebook sees is the blurring of lines between authentic public debate and manipulation. For example, in July 2018, Facebook eliminated a network connected to the IRA that was engaging with pre-planned, authentic events. The network’s goal was to target events related to divisive issues and volunteer to amplify them on behalf of the local organisers.

Thirdly, Facebook talks about ‘perception hacking’. Threat actors seek to capitalise on the public’s fear of influence operations (IOs) to create the false perception of widespread manipulation of electoral systems, even without evidence. For example, in the waning hours of the 2018 US midterm elections, Facebook tracked down an operation by the IRA that claimed to be running thousands of fake accounts with the capacity to sway the election results across the United States. The operators even created a website, usaira[.]ru, complete with an ‘election countdown’ timer, where they offered nearly a hundred recently created Instagram accounts as evidence of their claim. This shows the power of the self-fulfilling prophecy in disinformation.

The fourth trend Facebook sees is ‘influence operations as a service’. Commercial actors run influence operations both domestically and internationally, providing deniability to their customers and making influence operations more widely available. Over the past four years, Facebook has investigated and removed influence operations conducted by commercial actors including media, marketing and public relations companies. Unfortunately, Facebook does not offer any quantitative information about this trend.

Fifthly, Facebook outlines the trend of increased operational security. In response to increased efforts to stop them, experienced threat actors, including those from Russia and China, have significantly improved their ability to hide their identities, using technical obfuscation and witting and unwitting proxies. They are also more disciplined about avoiding careless mistakes, such as logging into purportedly American accounts from St. Petersburg in Russia. For example, in October 2019, Facebook removed a Russian IRA-linked network that was among the first to target the US 2020 election. The network primarily posted other people’s content, including memes with minimal or no text in English, and screenshots of social media posts by news organisations and public figures.

Sixthly, Facebook points to platform diversification. To evade detection and diversify risks, operations target multiple platforms (including smaller services) and the media, and rely on their own websites to carry on the campaign. By running operations on multiple platforms, threat actors are likely trying to ensure that their efforts survive enforcement by any given platform. They have also targeted hyper-local platforms (local blogs and newspapers) to reach specific audiences and to target public spaces with less-resourced security systems.

Mitigating the threats

‘We anticipate seeing more local actors worldwide who attempt to use IO tactics to influence public debate in their own countries, further blurring the lines between authentic public debate and deception. In turn, technology platforms, traditional media and civil society will be faced with more challenging policy and enforcement choices,’ says Facebook. Facebook proposes taking specific steps that will make influence operations less effective, easier to detect, and more costly. Below, we will discuss a few of them.

Whole-of-society and building deterrence

One area where a whole-of-society approach is impactful is in discouraging perpetrators from spreading disinformation. While platforms can take action within their boundaries, both societal norms and regulation against IO and deception, including when done by authentic voices, are critical to deterring abuse and protecting public debate. In March, we presented an overview of recent research on how to build deterrence.

Facebook also argues that disinformation flourishes in the absence of information, which is consistent with a Russian strategic paper we spotted last year. In early February 2020, the Russian Institute for Strategic Studies (RISS), a Kremlin-funded think tank, published an essay titled ‘Securing Information for Foreign Policy Purposes in the Context of Digital Reality’. The paper claimed that:

‘A preventively shaped narrative, answering to the national interests of the state, can significantly diminish the impact of foreign forces’ activities in the information sphere, as they, as a rule, attempt to occupy “voids” [in the information flow].’

Preventing disinformation from occupying these voids is what the EUvsDisinfo campaign does. We develop communication products and campaigns designed to explain EU values, interests and policies in the Eastern Partnership countries. Additionally, we report on and analyse disinformation trends, expose disinformation narratives, and raise awareness of the negative impact of disinformation disseminated in the Eastern neighbourhood’s information space and beyond.

Source: FB, Threat Report, 2017-2020

The unknown knowns and unknown unknowns

Even though Facebook has improved its capacity to detect influence operations, we don’t know which inauthentic networks Facebook might have missed, or exactly what else is happening on the platform, perhaps even beyond our imagination. In any case, Facebook’s knowledge and takedown capabilities remain crucial in the fight against disinformation and in the effort to impose higher costs on its perpetrators.

Ben Nimmo, a co-author of the report, put it very simply on Twitter:

‘More operators are trying, but more operators are also getting caught. The challenge is to keep on advancing to stay ahead and catch them.’