By Wasim Ahmed, Joseph Downing, Marc Tuters, Peter Knight, for The Conversation

In times of crisis, conspiracy theories can spread as fast as a virus.

As the coronavirus pandemic tightened its grip on a world which struggled to comprehend the enormity of the situation it was facing, darker forces were concocting their own narratives.

Scientists and researchers were working – and continue to work – around the clock for answers. But science is slow and methodical. So far-fetched explanations about how the outbreak started began filling the vacuum. Among these strange explanations is a theory that the recent rollout of 5G technology is to blame. But where did this theory begin, how did it develop and mutate and what can be done to stem the tide of fake news? We asked four experts who have all done extensive research in this area to examine these questions.

Marc Tuters, assistant professor of new media and digital culture at the University of Amsterdam, and Peter Knight, professor of American studies at the University of Manchester, examine the big questions and the history of conspiracy theories. Then Wasim Ahmed, lecturer in digital business at Newcastle University, and Joseph Downing, a nationalism research fellow at the London School of Economics, share the results of their new study into the origins of the 5G conspiracy theory on social media.

A toxic cocktail of misinformation

Marc Tuters and Peter Knight

Conspiracy theories about mobile phone technology have been circulating since the 1990s, and have long historical roots. Doctors first talked of “radiophobia” as early as 1903. Following on from fears about power lines and microwaves in the 1970s, opponents of 2G technology in the 1990s suggested that radiation from mobile phones could cause cancer and that this information was being covered up. Other conspiracy theories about 5G include the idea that it was responsible for the unexplained deaths of birds and trees. The coronavirus 5G conspiracy theory comes in several different strains, of varying degrees of implausibility.

One of the first versions of the theory claimed that it was no coincidence that 5G technology was trialed in Wuhan, where the pandemic began (this is incorrect, as 5G was already being rolled out in a number of locations). Some claim that the coronavirus crisis was deliberately created in order to keep people at home while 5G engineers install the technology everywhere. Others insist that 5G radiation weakens people’s immune systems, making them more vulnerable to infection by COVID-19. Another mutation of the 5G conspiracy theory asserts that 5G directly transmits the virus. These different 5G stories are often combined with other COVID-19 conspiracy theories into a toxic cocktail of misinformation.

At first, some conspiracy theorists insisted that the threat of the virus (and the apparent death rates) had been exaggerated. Echoing President Donald Trump’s own language, some of his supporters considered this as part of an elaborate “hoax” intended to harm his chances of re-election. Others, particularly on the far right in the US, framed lockdown emergency measures in terms of “Deep State” efforts at controlling the population and called for a “second civil war” in response.

Other prominent theories include the claim that the virus was accidentally released by the Wuhan Institute of Virology, or that it was deliberately made as a biowarfare weapon, either by the Chinese or the Americans. One increasingly popular idea is that the pandemic is part of a plan by global elites like Bill Gates or George Soros – in league with Big Pharma – to institute mandatory worldwide vaccinations that would include tracking chips, which would then be activated by 5G radiowaves.

Polling data in various countries including the UK, the US, France, Austria, and Germany has shown that the most popular coronavirus conspiracy theory is that the virus was man-made – 62% of respondents in the UK think that this theory is true to some degree. In that UK poll, 21% agreed, to varying extents, that coronavirus is caused by 5G and is a form of radiation poisoning transmitted through radio waves. In comparison, 19% agreed that Jews have created the virus to collapse the economy for financial gain.

Where did these theories come from?

Few of these theories are new. Most of them are mutations or re-combinations of existing themes, often drawing on narrative tropes and rhetorical manoeuvres that have a long history. Conspiracy theorists usually have a complete worldview, through which they interpret new information and events, to fit their existing theory. Indeed, one of the defining characteristics of conspiracy thinking is that it is self-sealing, unfalsifiable and resistant to challenge. The absence of evidence is, ironically, often taken as evidence of a massive cover up.

The dismissal of the pandemic as a hoax and the questioning of scientific experts is straight out of the playbook of climate change denial. The 5G theory about radiowaves transmitting or activating the virus, for example, is a reworking of long running conspiracy fears about mind control experiments, subliminal messaging and supposed secret US military weapons projects (all ripe topics for Hollywood’s movie industry).

The 5G story shares similarities with rumours that date back to the 1990s about HAARP (the US military’s High Frequency Active Auroral Research Program). HAARP was a large radio transmitter array located in Alaska and funded by the US Department of Defence, in conjunction with a number of research universities. The programme conducted experiments into the ionosphere (the upper layer of the atmosphere) using radio waves, and was closed down in 2014. Conspiracy theorists, however, claimed that it was actually developing a weapon for weather control as well as mind control. Similarly, concerns have been expressed that 5G might in fact be a hi-tech weapon whose use represents an “existential threat to humanity”.

There have also long been conspiracy rumours that Big Pharma is suppressing a cure for cancer. The idea that the virus was made in a lab mirrors claims made a quarter of a century ago about HIV/AIDS. One origin for that story was an early example of a KGB disinformation campaign. The allegation that the Bill and Melinda Gates Foundation or George Soros planned the coronavirus pandemic is a version of familiar right-wing (and often racist and antisemitic) conspiracy fantasies about “globalist” elites threatening national and individual sovereignty. There is mounting evidence that far-right groups are opportunistically using fear and uncertainty surrounding the pandemic to promote their hateful politics.

Populist conspiracy theories often work by dividing the world into Us vs Them, with the aim of scapegoating people and institutions and providing simple explanations for complex phenomena. The 5G coronavirus conspiracy theories are particularly challenging because they bring together people from very different parts of the political spectrum. On the one hand, they attract the far-right who see them as part of a technological assault by big government on the freedom of individuals. On the other, they appeal to the well established anti-vaxxer community, who are often allied with those distrustful of Big Pharma.

In the US, which is in an election year, coronavirus mitigation strategies have become a divisive culture war issue, with the president refusing to wear a face mask. But in countries like Germany anti-lockdown issues appear to be creating connections across the political spectrum, led by social media influencers who are working to connect the dots between previously separate conspiracy theory communities or tribes.

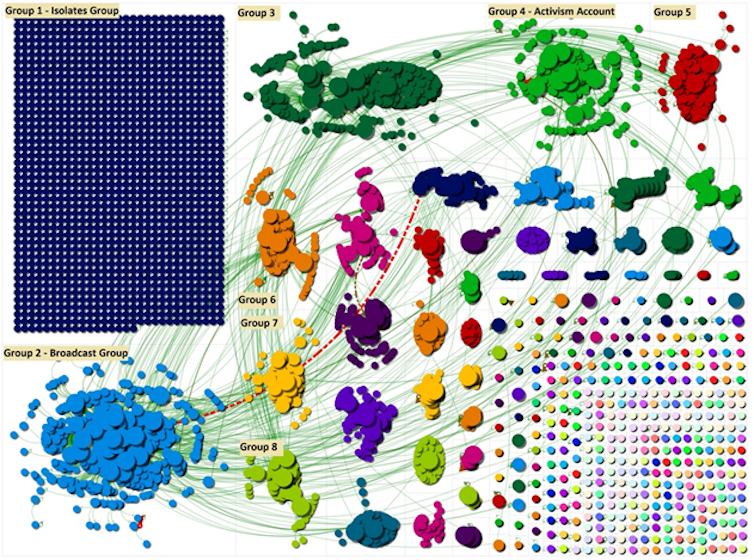

As seen in the quantitative analysis below, such influencers anchor conspiracy theory communities on social media. Because these methods provide only a partial view, it is problematic to assume that the members of these communities are necessarily trapped within echo chambers, unable to access other points of view. Nevertheless the findings do correspond with the troubling patterns outlined above. And they also show that those who believe in and propagate conspiracy theories can come from a cross section of society.

Social network analysis

Wasim Ahmed and Joseph Downing

Our study set out to investigate the 5G conspiracy theory on Twitter towards the beginning of April 2020, when the conspiracy theory was trending in the UK and becoming more visible.

This time period coincided with reports that at least 20 UK 5G phone masts were vandalised, including damage reported at a hospital. There were also 5G arson attacks across continental Europe during this time.

Our research set out to uncover who was spreading the conspiracy theory, the percentage of users who believed the theory and what steps were needed to combat it. We used a tool called NodeXL to carry out a social network analysis. NodeXL is a Microsoft Excel plugin which can be used to retrieve data from a number of social media platforms such as Twitter.

We captured data using the “5Gcoronavirus” keyword, which also retrieved tweets with the #5GCoronavirus hashtag. The tweets we analysed were posted from March 27 to April 4. The network consisted of a total of 10,140 tweets, made up of 1,938 mentions, 4,003 retweets, 759 mentions in retweets, 1,110 replies, and 2,328 individual tweets.
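To make the composition of the dataset easier to see, the short Python sketch below tallies the tweet categories exactly as they are reported above and works out each category's share. The dictionary labels are shorthand chosen for this illustration, not field names from NodeXL. One thing the arithmetic shows is that the five listed categories add up to 10,138, two fewer than the stated total of 10,140; the text does not explain the difference and the sketch does not attempt to.

```python
# Counts as reported in the text; the labels are shorthand chosen for this sketch.
reported_total = 10_140
tweet_types = {
    "mentions": 1_938,
    "retweets": 4_003,
    "mentions in retweets": 759,
    "replies": 1_110,
    "individual tweets": 2_328,
}

listed_sum = sum(tweet_types.values())

# Share of each category, relative to the sum of the listed categories.
shares = {
    name: round(100 * count / listed_sum, 1)
    for name, count in tweet_types.items()
}

for name, share in shares.items():
    print(f"{name}: {share}%")
print(f"listed categories sum to {listed_sum}; reported total is {reported_total}")
```

On these figures retweets are the single largest category, at roughly two in every five items, which fits the later observation that the theory was amplified as users retweeted content.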

We found that there was a specific Twitter account, set up as @5gcoronavirus19 with 383 followers, which was spreading the conspiracy theory and had become influential in driving it forward on social media. The account was able to send out 303 tweets in seven days. We also found that President Trump was often tagged in tweets and was influential within the network without having tweeted himself. This highlights the point about support for these theories coming from the alt-right.

Out of a total of 2,328 individual tweets, 34.8% of users believed the theory and/or shared views in support of it. For example, one user who we are not identifying due to the ethics on which our study was based tweeted:

5G Kills! #5Gcoronavirus – they are linked! People don’t be blind to the truth!

But 32% denounced the theory or mocked it. For instance, one user noted: “5G is not harming or killing a single person! COVID-19 #5Gcoronavirus”.

A further 33% were just general tweets not expressing any personal views or opinions. For example, one user tweeted: “I have a 10AM Skype Chat on Monday, COVID-19 #5Gcoronavirus”. But this overt lack of support for the conspiracy itself became a problem because, as more users joined the discussion, the profile of the topic was raised, which allowed it to start trending.
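For a rough sense of scale, the three percentages above can be turned into approximate tweet counts. The sketch below assumes that the percentages apply to the 2,328 individual tweets and that they are rounded figures, so the counts are estimates rather than numbers taken from the study. The labels "supportive", "critical" and "neutral" are this sketch's own shorthand.

```python
individual_tweets = 2_328  # figure reported in the text

# Percentages as reported; the short labels are this sketch's own.
stance_pct = {"supportive": 34.8, "critical": 32.0, "neutral": 33.0}

# Approximate number of tweets per stance, assuming the percentages
# are shares of the 2,328 individual tweets.
approx_counts = {
    label: round(individual_tweets * pct / 100)
    for label, pct in stance_pct.items()
}

total_pct = round(sum(stance_pct.values()), 1)

for label, count in approx_counts.items():
    print(f"{label}: about {count} tweets")
print(f"percentages sum to {total_pct}%")
```

Under that assumption the three groups are of similar size, a little over 800 supportive tweets against roughly 750 critical and 770 neutral ones. The reported percentages sum to 99.8%, which is consistent with rounding.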

Network clusters

We created a social network graph (above), and clustering identified different shapes and structures within the network. The largest group in the network represented an “isolates group”. These groups are typically formed when a user mentions a hashtag in their tweets without mentioning another user. Big brands, sports events and breaking news stories will all have a sizeable isolates group. This suggests that during this time the conspiracy topic had become popular and attracted views and opinions from users who were new to the network.

The second largest network shape resembled a “broadcast” network and contained users that were being retweeted. Broadcast networks can typically be found in the Twitter feeds of celebrities and journalists. The Twitter handle @5gcoronavirus19, which was set up to spread the theory, formed a group of its own resembling a broadcast network shape and it received a number of retweets, showing how the conspiracy theory was being amplified as users retweeted content. Conspiracy theorists are likely to use comments made by influential figures which can add fuel to the fire.
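The two shapes described above can be illustrated with a toy example. The Python sketch below is not the clustering that NodeXL performs, which is not described here; it only shows the underlying idea on invented data. Users who appear in no retweet or mention edge are treated as isolates, and an account that many others retweet sits at the centre of a broadcast-style group. All account names and edges are hypothetical, as is the helper function `summarise_network`.

```python
from collections import Counter


def summarise_network(users, edges):
    """Return (isolates, in_degree) for a directed edge list.

    Each edge is (source, target): source retweeted or mentioned target.
    """
    # How many times each account was retweeted or mentioned by others.
    in_degree = Counter(target for _, target in edges)
    # Every account that takes part in at least one edge.
    connected = {user for edge in edges for user in edge}
    # Accounts that tweeted but are linked to nobody.
    isolates = sorted(user for user in users if user not in connected)
    return isolates, in_degree


# Hypothetical toy data, not drawn from the study.
users = ["hub_account", "user_a", "user_b", "user_c", "solo_1", "solo_2"]
edges = [
    ("user_a", "hub_account"),
    ("user_b", "hub_account"),
    ("user_c", "hub_account"),
]

isolates, in_degree = summarise_network(users, edges)
top_account, retweet_count = in_degree.most_common(1)[0]

print("isolates:", isolates)
print("most retweeted:", top_account, retweet_count)
```

In this toy network the two "solo" accounts form the isolates group, while the hub account, retweeted by three others, plays the role that a broadcast account plays in the real graph.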

A key example of this would be when the television presenter Eamonn Holmes said the media couldn’t say for sure whether the 5G theory was false. These comments fell outside of the time period we studied. But they are likely to have had an impact across social media platforms. Holmes was strongly criticised by Ofcom, which noted that his comments risked undermining the public’s faith in science.

The misinformation pandemic

Months before mobile phone masts were attacked in the UK, the “infodemic” (a wide and rapid spread of misinformation) was unfolding at a rapid pace. In France, news spread on Facebook of a tasty cure for the virus: Roquefort cheese. Indeed, a far more dangerous public health prospect than blue cheese, the rumour that cocaine could cure COVID-19 caused the French Ministry of Health to release a warning statement.

Some argue that strange events like this, which erupt from the online world of fake news, memes and misinformation, effectively delivered Trump the US presidency. Given that a survey showed 75% of Americans believed fake news during that election, this claim is not as outrageous as it initially sounds. But there is another theory. Rather than social media activity leading to direct “real world” action, the reverse could be true. For example, a major event like the Arab Spring was a real world action that caused a ripple effect across social media.

Dark forces are still at work on the internet during major events. They seek to spread a fake news agenda and change the way events are perceived and constructed in dangerous ways. Our other research, carried out with Richard Dron from the University of Salford, examined the depictions of Muslims during the Grenfell fire and tracked how Grenfell was covered on Twitter as the fire still burned in the early hours of June 14, 2017. In the following days both celebrities and politicians would be reprimanded for spreading distrust about official accounts of the fire.

A strong denunciation of the 5G and COVID-19 conspiracy from a world leader, when it surfaced, would have helped in mitigating the effect of the theory on the public. But during this time Boris Johnson, the UK’s prime minister, was himself sick with COVID-19. So there was no direct rebuttal from him.

Although this would have helped, we believe the fight should ideally take place on the platform on which the conspiracy is shared. Our ongoing work on fitness influencers demonstrates how popular culture figures with large followings on Twitter and other social media platforms can sometimes have more of an appeal – and be more believable – than “official” accounts or politicians. That is why we believe that governments and health authorities should draw on social media influencers in order to counteract misinformation.

It is also important to note which websites people were sharing around this time, as they are likely to play a key role in the spread and existence of the theory. Unsurprisingly, “fake news” websites such as InfoWars published a number of articles which indicated that there was a link between COVID-19 and 5G technologies. YouTube also appeared as an influential domain, as Twitter users linked to various videos which were spreading the theory.

Worryingly, our study found that a small number of Twitter users were happy to see footage posted of 5G masts being damaged and hoped for more to be attacked. Twitter has been taking action and blocking users from sharing 5G conspiracy theories on the platform. YouTube has also been banning content that contains medical misinformation. It has not been easy for social media platforms to keep up, as the pandemic has given rise to over ten different conspiracy theories.

One way the public can join the fight against conspiracy theories is to report inappropriate and/or dangerous content on social media platforms and – more importantly – avoid sharing or engaging with it. Meanwhile the mainstream media on public television, newspapers and radio should be doing its part by discussing and dispelling conspiracy theories as they arise.

But social media platforms, citizens and governments need to work together with experts to regain trust and debunk the deluge of fake news and ever evolving theories.

Mutations

Marc Tuters and Peter Knight

The viral conspiracy video, Plandemic, is a key example as it has helped coronavirus conspiracy theories spread even more widely into the mainstream. The video – which briefly went viral on YouTube and Facebook until it was taken down – focused on a discredited virologist who promotes the theory that the coronavirus pandemic was a Big Pharma plot to sell vaccines. Although such conspiracy theories are less widespread than the torrent of coronavirus misinformation that is being catalogued and debunked by media watchdog groups, what is particularly concerning is how they are mutating and combining into novel and potentially dangerous forms as different tribes converge and encroach on the mainstream with slick videos involving “real” experts.

With coronavirus, existing 5G conspiracy theories have indeed become supercharged, leading for instance to new protest movements such as the “hygiene protests” in Germany. In these protests, unfamiliar configurations of left and right-wing activists are finding common cause in their shared indignation towards lockdown protocols.

In the past several years Deep State conspiracy theories like Pizzagate and QAnon first developed within reactionary “deep web” communities before spreading into the mainstream, where they were amplified by disinformation bots, social media influencers, celebrities, and politicians. Thriving communities have grown up around these theories, clustered around conspiracy theory entrepreneurs. A significant number of these figures, with some notable exceptions, have pivoted to interpret the coronavirus pandemic through their particular conspiratorial lens. With coronavirus as a common strand connecting these various tribes, the result has been a cross-fertilisation of ideas. Such hybridised conspiracy theories appear to be popping up across all points of the political spectrum and of the web, in contrast to previous cases when they emerged primarily from the margins and spread to the mainstream.

In comparison with previous outbursts of fake news, social media platforms have responded quite proactively to the abundance of coronavirus-related problematic information. Google, for example, curates coronavirus-related search results, meaning that they only return authoritative sources and feature links to those sources where advertisements would usually have appeared.

Platforms have also been much more willing to delete problematic trending content, as with the case of the Plandemic video which YouTube removed within 24 hours – although not before it had reached 2.5 million views. While this kind of banned content inevitably migrates to an “alternative social media ecology” of sites like Bitchute and Telegram, their much smaller audience share diminishes the reach of these conspiracy theories as well as undermining the revenue streams of their entrepreneurs.

In an era in which public distrust of institutions and suspicion of elites is one of the contributing factors in the global rise of national populism, the communication of authoritative knowledge is undoubtedly a challenge for governments.

In this time of enormous uncertainty, capable and honest leadership is one of the only truly effective measures which will help manage the spread of coronavirus misinformation and politicians should be putting party allegiances to one side while confronting the problem. For everyone else this means accepting that short term solutions are unlikely and that people should trust the experts, think before sharing social media content and care for one another.


Wasim Ahmed – lecturer in Digital Business, Newcastle University

Joseph Downing – LSE Fellow in Nationalism, London School of Economics and Political Science

Marc Tuters – Department of Media & Culture, Faculty of Humanities, University of Amsterdam

Peter Knight – Professor of American Studies, University of Manchester