A new analysis of 32 Russia-linked Facebook and Instagram accounts – which have since been removed – reveals how the Internet Research Agency’s tactics are evolving to circumvent the platforms’ nascent defences against manipulation.

The research comes from University of Wisconsin professor Young Mie Kim, who started analysing posts on Facebook and Instagram from 32 accounts connected to the Internet Research Agency (IRA) in September 2019. The findings confirm that the IRA has a digital campaign underway targeting the 2020 US election, and that its tactics are growing increasingly brazen and sophisticated.

Although the accounts in question were removed by Facebook in October 2019 as part of a larger takedown encompassing 75,000 posts, Kim’s research team was able to capture some of the posts prior to their removal. Of the 32 accounts that Kim’s team identified as exhibiting IRA attributes, 31 were confirmed as having links to the troll factory by Graphika, a social media analysis company commissioned by Facebook to examine the operation.

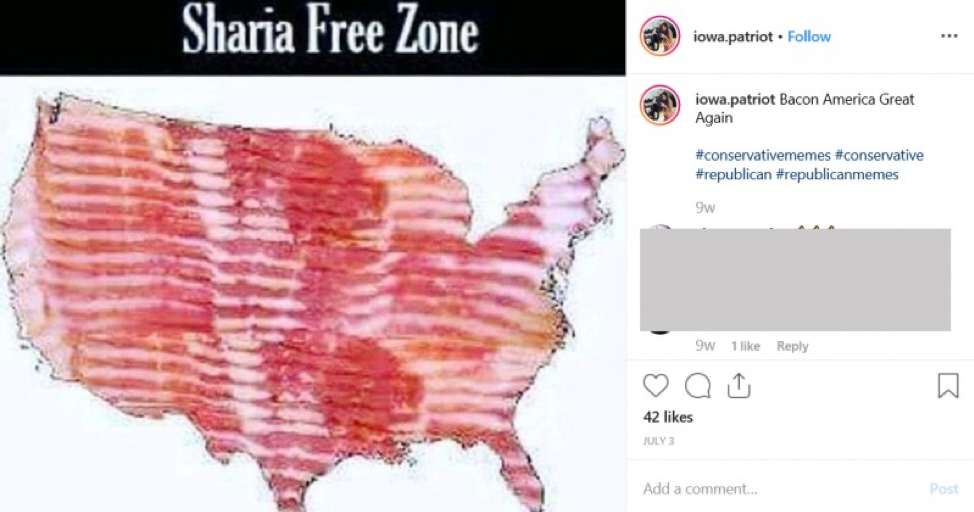

An anti-Muslim Instagram post by an IRA account from 3 July 2019.

Image courtesy of Project Data

Combining old and new to evade detection

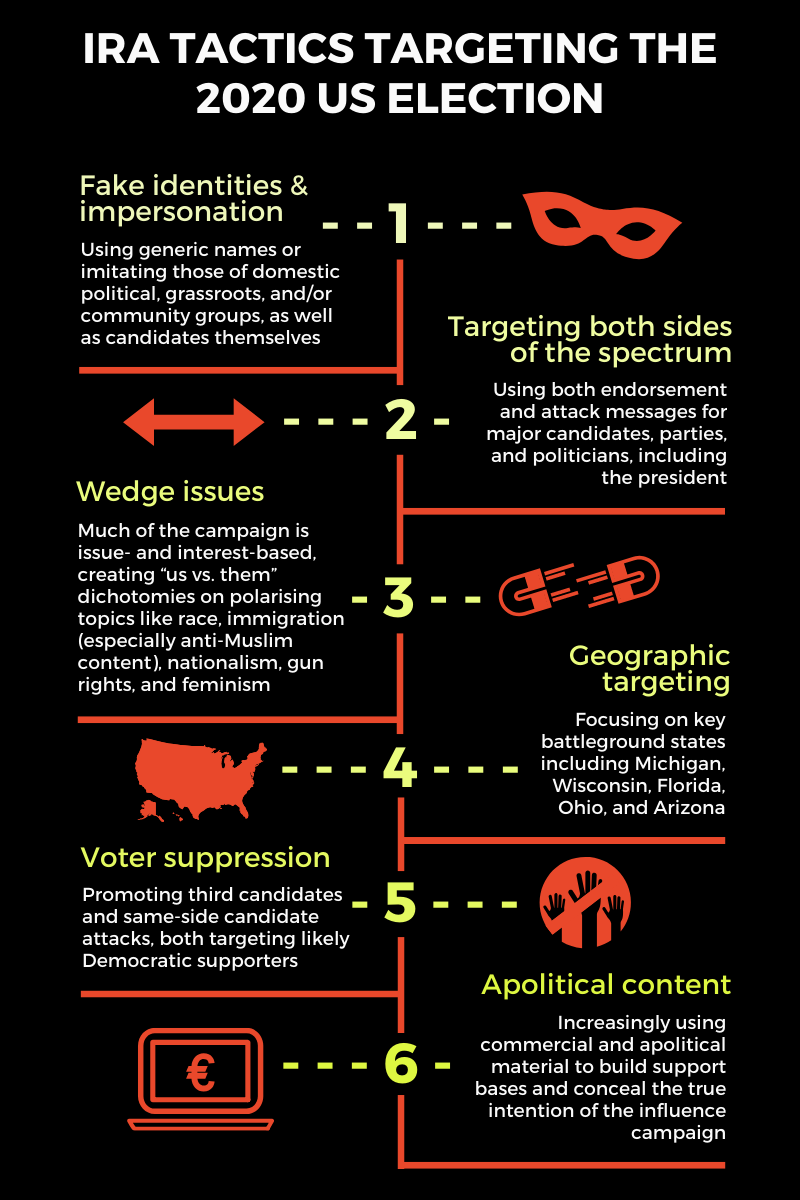

Some of the IRA’s strategies and tactics were the same as before: impersonating Americans, including political groups and candidates, and attempting to sow partisan division by targeting both sides of the political spectrum with posts designed to incite outrage, fear, and hostility. Much of the activity was also aimed at discouraging certain groups from voting – a common tactic also used in other elections – and focused on swing states (namely Michigan, Wisconsin, Florida, Ohio, and Arizona). This voter suppression strategy notably used same-side candidate attacks, which aim to splinter the coalition of a given side, as well as the promotion of “third candidates” (e.g., Rep. Tulsi Gabbard).

But the posts also show how the IRA’s methods are growing increasingly sophisticated and audacious. Its trolls have become better at impersonating candidates and parties, for example by mimicking the logos of official campaigns with greater precision. They have also moved away from creating their own fake advocacy groups to imitating and appropriating the names of actual American groups. Finally, they have increased their use of apparently apolitical and commercial content in an effort to obscure their attempts at political manipulation. These efforts to better imitate authentic user behaviour appear designed to evade detection as platforms adopt greater transparency measures and defences against manipulation that focus on coordinated inauthentic behaviour. Improvements in operational security also helped the IRA accounts appear less conspicuous.

Protecting elections is a matter of urgency

In light of these findings, as well as new revelations that the Internet Research Agency has been outsourcing its work to troll farms in Africa in pursuit of plausible deniability, it is clear that the challenges of safeguarding our electoral processes from foreign intervention are graver and more urgent than ever. Ensuring electoral integrity in the face of these evolving manipulation tactics requires comprehensive regulatory solutions for digital political campaigning and distortive practices like astroturfing, as well as more stringent transparency requirements for online platforms. Such measures could include thorough cross-platform archives of political campaigns to help researchers and public authorities track digital political advertising and expose malign influence efforts before they can gain traction.