By Sander van der Linden, Jon Roozenbeek, for The Conversation

One high-profile theory of why people share fake news says that they aren’t paying sufficient attention. The proposed solution is therefore to nudge people in the right direction. For example, “accuracy primes” – short reminders intended to shift people’s attention towards the accuracy of the news content they come across online – can be built into social media sites.

But does this work? Accuracy primes do not teach people any new skills to help them determine whether a post is real or fake. And there could be other reasons, beyond a simple lack of attention, that lead people to share fake news, such as political motivations. Our new research, published in Psychological Science, suggests that primes on their own are unlikely to reduce misinformation by much. Our findings offer important insights into how best to combat fake news and misinformation online.

Priming is a more or less unconscious process that works by exposing people to a stimulus (such as asking people to think about money), which then influences their responses to subsequent stimuli (such as their willingness to endorse free-market capitalism). Over the years, repeated failures to reproduce many types of priming effects have led Nobel laureate Daniel Kahneman to conclude that “priming is now the posterchild for doubts about the integrity of psychological research”.

Using priming to counter misinformation sharing on social media is therefore a good test case for the robustness of priming research.

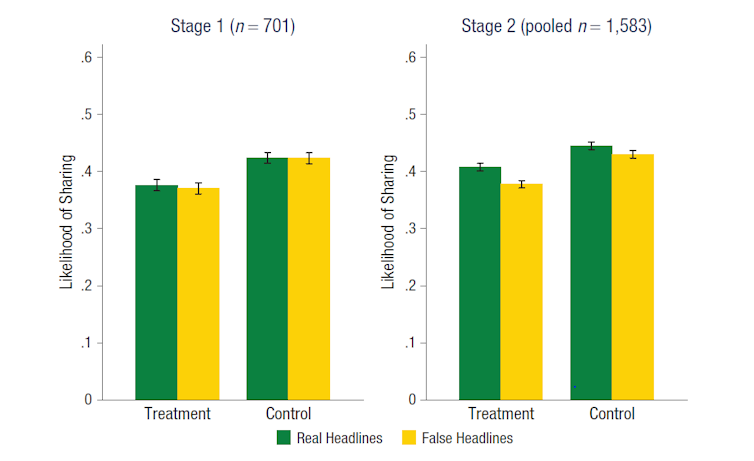

We were asked by the Center for Open Science to replicate the results of a recent study on countering COVID-19 misinformation. In this study, two groups of participants were shown 15 real and 15 false headlines about the coronavirus and asked to rate how likely they were to share each headline on social media, on a scale from one to six.

Before this task, half of the participants (the treatment group) were shown an unrelated headline, and asked to indicate whether they thought this headline was accurate (the prime). Compared to the control group (which was not shown such a prime), the treatment group had significantly higher “truth discernment” – defined as the willingness to share real headlines rather than false ones. This indicated that the prime worked.
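To make the “truth discernment” measure concrete, here is a minimal sketch of it as a difference score. It assumes discernment is computed per participant as the mean sharing rating for real headlines minus the mean rating for false ones, on the study’s one-to-six scale; the function name and the ratings are hypothetical illustrations, not data from either study.

```python
from statistics import mean


def truth_discernment(real_ratings, false_ratings):
    """Hypothetical helper: mean sharing intention for real headlines minus
    the mean for false headlines. Ratings are assumed to be on a 1-6 scale;
    a positive score means the participant is more willing to share real
    news than fake news, and 0 means no discernment at all."""
    if not real_ratings or not false_ratings:
        raise ValueError("need at least one rating for each headline type")
    for rating in (*real_ratings, *false_ratings):
        if not 1 <= rating <= 6:
            raise ValueError(f"rating {rating} is outside the 1-6 scale")
    return mean(real_ratings) - mean(false_ratings)


# Invented ratings for one participant: 15 real and 15 false headlines.
real = [4, 5, 3, 4, 6, 2, 4, 5, 3, 4, 4, 5, 3, 2, 4]
false = [2, 1, 3, 2, 1, 2, 3, 1, 2, 2, 1, 3, 2, 1, 2]
print(round(truth_discernment(real, false), 2))  # prints 2.0
```

A treatment effect on discernment would then be a difference between the average of these scores in the primed group and the average in the control group.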

To maximise the chance of a successful replication, we collaborated with the authors of the original study. We first collected a sample large enough to detect an effect of the size reported in the original study. If this first round of data collection did not produce a significant effect, we were required to collect a second round of data and pool it with the first.

Our first replication test was unsuccessful: the accuracy prime had no effect on subsequent news sharing intentions. This is in line with the results of replication attempts for other priming research.

For the pooled dataset, which consisted of almost 1,600 participants, we did find a significant effect of the accuracy prime on subsequent news sharing intentions. But the effect was only about half the size of the one reported in the original study. That means that if we picked one person at random from the treatment group and one at random from the control group, the probability that the person from the treatment group would make better news sharing decisions is about 54%, barely above chance. This indicates that the overall effect of accuracy nudges may be small, consistent with previous findings on priming. Of course, if scaled across millions of people on social media, this effect could still be meaningful.
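A figure like “54%” can be read as a “probability of superiority” (also called the common-language effect size): the chance that a randomly chosen treated person outscores a randomly chosen control person. Assuming normally distributed scores with equal variance in both groups, the textbook conversion from a standardised mean difference d is P = Φ(d/√2), where Φ is the standard normal cumulative distribution function. The sketch below applies that conversion; whether the 54% figure was derived exactly this way is an assumption, and the function names are hypothetical.

```python
from math import sqrt
from statistics import NormalDist

# Standard normal distribution (mean 0, standard deviation 1).
_STD_NORMAL = NormalDist()


def prob_superiority(d):
    """Chance that a random treated score beats a random control score,
    assuming normal scores with equal variance and a standardised mean
    difference d between the groups: P = Phi(d / sqrt(2))."""
    return _STD_NORMAL.cdf(d / sqrt(2))


def d_from_prob_superiority(p):
    """Inverse of prob_superiority: d = sqrt(2) * Phi^-1(p), for 0 < p < 1."""
    return sqrt(2) * _STD_NORMAL.inv_cdf(p)


print(round(prob_superiority(0.0), 2))         # prints 0.5 (pure chance)
print(round(d_from_prob_superiority(0.54), 2))  # prints 0.14
```

Under these assumptions, a 54% probability of superiority corresponds to a standardised difference of roughly d = 0.14, which is conventionally regarded as a small effect.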

We also found some indication that the prime may work better for US Democrats than for Republicans, with the latter appearing to barely benefit from the intervention. There could be a variety of reasons for this. Given the highly politicised nature of COVID-19, political motivations may have a large effect. Conservatism is associated with lower trust in mainstream media, which may lead some Republicans to see credible news outlets as “biased”.

Priming effects are also known to disappear rapidly, usually after a few seconds. We explored whether this is also true of accuracy primes by checking whether the treatment effect was concentrated in the first few headlines that participants were shown. It appears that the treatment effect had disappeared after participants rated a handful of headlines, which would take most people no more than a few seconds.

Ways forward

So what’s the best way forward? Our own work has focused on leveraging a different branch of psychology, known as “inoculation theory”. This involves pre-emptively warning people of an impending attack on their beliefs and refuting the persuasive argument (or exposing the manipulation techniques) before they encounter the misinformation. This process specifically helps confer psychological resistance against future attempts to mislead people with fake news, an approach also known as “prebunking”.

In our research, we show that inoculating people against the manipulation techniques commonly used by fake news producers indeed makes them less susceptible to misinformation on social media, and less likely to report that they would share it. These inoculations can come in the form of free online games, of which we’ve so far designed three: Bad News, Harmony Square and Go Viral!. In collaboration with Google Jigsaw, we also designed a series of short videos about common manipulation techniques, which can be run as ads on social media platforms.

Other researchers have tested similar ideas with a related approach known as “boosting”. This involves strengthening people’s resilience to micro-targeting (ads that target people based on aspects of their personality) by getting them to reflect on their own personality first.

Additional tools include fact-checking and debunking, algorithmic solutions that downrank unreliable content and more political measures such as efforts to reduce polarisation in society. Ultimately, these tools and interventions can create a multi-layered defence system against misinformation. In short: the fight against misinformation is going to need more than a nudge.

Sander van der Linden – Professor of Social Psychology in Society and Director, Cambridge Social Decision-Making Lab, University of Cambridge

Jon Roozenbeek – Postdoctoral Fellow, Psychology, University of Cambridge.

Disclosure statement: Sander van der Linden consults for and receives funding from Google Jigsaw, EU Commission, Facebook, Edelman, ESRC, and the UK Government. Jon Roozenbeek receives funding from the ESRC, Google Jigsaw, and the UK Cabinet Office.