By Laura Hazard Owen, for NiemanLab

When people saw that a questionable piece of content had been liked and shared lots of times, they were more likely to share it themselves

It’s time to rethink engagement metrics, say the authors of a paper published last week in the Harvard Kennedy School’s Misinformation Review. Mihai Avram, Nicholas Micallef, Sameer Patil, and Filippo Menczer found that “the display of social engagement metrics” — visible displays of how many times a piece of content has been liked and shared, i.e. the norm on Facebook, Twitter, and Instagram — “can strongly influence interaction with low-credibility information. The higher the engagement, the more prone people are to sharing questionable content and less to fact-checking it.”

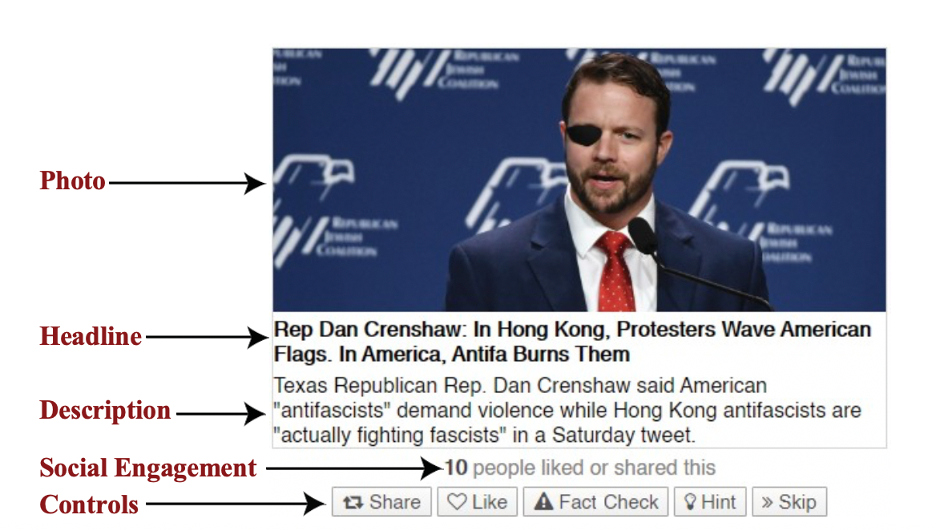

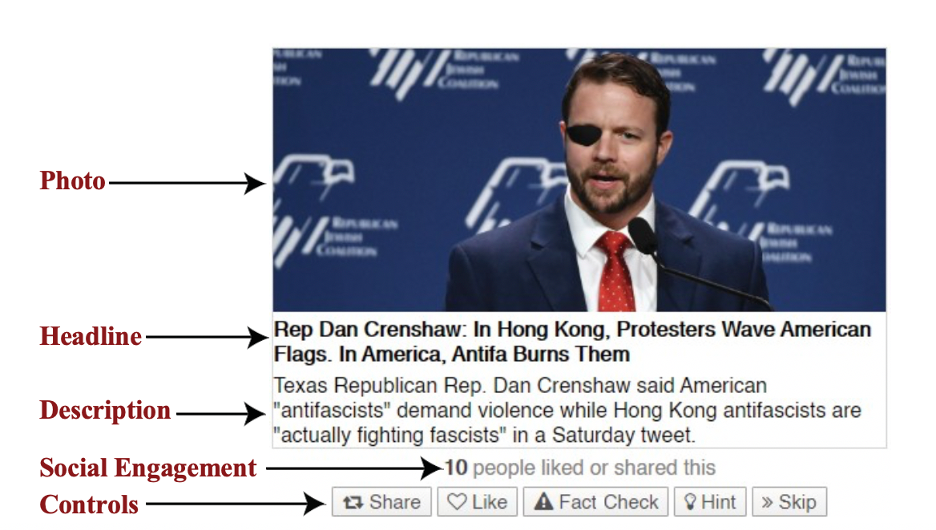

The researchers developed an online news literacy game called “Fakey” that “simulates fact-checking on a social media feed”; it’s available in a web browser or as an Apple or Android app. (Yes, you can play it!) Between May 2018 and November 2019, the researchers recorded game sessions from 8,606 players with 120,000 news articles, about half of which were from “low-credibility sources.” Of those players, 78% were from the U.S.

Each time the players were presented with an article, they could choose to share, like, or fact-check it. (They earned points when they liked or shared news from mainstream sources and when they fact-checked news from suspect sources; the paper has more on which sources were included.) Here’s an example:

The researchers write: “Player behavior in the Fakey game shows near-perfect correlations between displayed social engagement metrics and player actions related to information from low-credibility sources. We interpret these results as suggesting that social engagement metrics amplify people’s vulnerability to low-credibility content by making it less likely that people will scrutinize potential misinformation while making it more likely that they like or share it. For example, consider the recent disinformation campaign video ‘Plandemic’ related to the COVID-19 pandemic. Our results suggest that people may be more likely to endorse the video without verifying the content simply because they see that many other people liked or shared it.”

This research dovetails with Facebook’s recent griping about New York Times tech reporter Kevin Roose, who regularly posts on Twitter the 10 most-engaged news stories on Facebook, as measured by CrowdTangle. The Verge’s Casey Newton wrote recently:

“[W]hat he has found, for the most part, is that the most popular stories come from right-leaning publishers and pages. On this day in June, the top stories came from Donald Trump, Franklin Graham, Fox News, and Ben Shapiro. Later that month: Franklin Graham, Fox News, Mark Levin. On [July 20]: a sea of Fox News and Ben Shapiro, punctuated by a lone link from the liberal page Occupy Democrats.

“Roose’s tweets in this format go — if not viral, exactly, then at least further around the timeline than your average publisher data set. Let me say: I have retweeted these tweets. I have retweeted them because, in an era where Congress has held multiple hearings inveighing against ‘bias’ against conservatives on social networks, the data suggested that the opposite has been true all along: that social networks have been a powerful ally to the conservative movement, helping it to reach a much wider audience than it ever would have otherwise.

“It is also true that these tweets have been driving people at Facebook absolutely crazy. And the reason is that the way CrowdTangle measures the popularity of partisan links is not the way that Facebook, which owns the tool, thinks that we ought to be measuring popularity.”

John Hegeman, Facebook’s VP of News Feed, pushed back, saying that while, okay, CrowdTangle is accurate, the most engaged-with content on Facebook isn’t actually the stuff that most people see.

Facebook has continued doling out some proprietary data on Twitter.

But until all this is available publicly, we’ll have to rely on the CrowdTangle data. Roose created a separate Twitter account just for that.
